
Learning for Robotic Navigation

At a Glance

Objective

Classify regions in rover imagery according to their ease of traverse. Decrease traverse times, avoid getting stuck.

Benefit

Novel system classifies terrain underfoot by feel, records the appearance of that terrain, and then uses this appearance to classify far-field scenes.

Approach

Combination of a Hidden Markov Model (HMM) unsupervised terrain classifier with an advanced Support Vector Machine (SVM) visual classifier. The resulting system runs in real time on the robot.

Accomplishments

The technology was developed during the DARPA LAGR (Learning Applied to Ground Robotics) program, the little brother to the DARPA Grand Challenge. The full system was among the top three finishers in highly competitive field tests conducted by DARPA management.

It is now being extended and tested in work sponsored by the Army Research Office (ARO).

Details

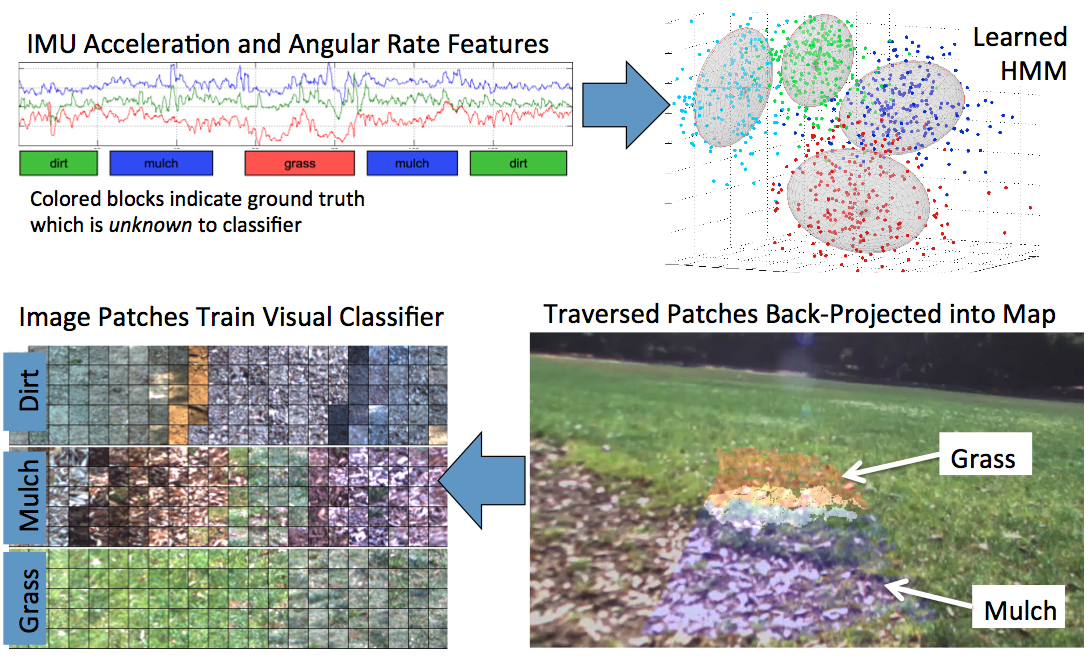

Proprioceptive Classification

Our approach to proprioceptive classification uses extensive logs of high-rate IMU (~100 Hz), slip, and motor current data, collected over diverse terrain types, to provide information for an unsupervised terrain classifier. The unsupervised classifier uses a hidden Markov model (HMM), determined with advanced optimization techniques, to exploit temporal coherence of terrain classes as the vehicle moves. We obtain the number of distinct classes automatically by computing the stability of the clustering solution: the number of classes is increased up to the point where the number of local maxima in the fitness landscape (HMM log-likelihood) grows too large to obtain a stable solution.
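The stability criterion described above can be sketched as follows. This is a minimal illustration, not the project's implementation: it assumes the HMM has already been fitted from several random restarts for each candidate class count, and that two restarts landed in the same local maximum when their final log-likelihoods agree within a tolerance. The function names, the tolerance `tol`, the threshold `max_optima`, and all log-likelihood values are hypothetical.

```python
def count_distinct_optima(log_likelihoods, tol=1.0):
    """Count restarts whose final log-likelihoods differ by more than tol.

    Assumption: restarts that converge to the same local maximum end with
    nearly identical log-likelihoods.
    """
    distinct = []
    for ll in sorted(log_likelihoods, reverse=True):
        # Start a new group only when this value is clearly below the
        # representative of the current group.
        if not distinct or distinct[-1] - ll > tol:
            distinct.append(ll)
    return len(distinct)


def select_num_classes(restart_logliks, max_optima=2, tol=1.0):
    """Return the largest class count whose restarts still agree.

    restart_logliks maps a candidate class count K to the final
    log-likelihoods of several random restarts of HMM training with K classes.
    """
    best_k = None
    for k in sorted(restart_logliks):
        if count_distinct_optima(restart_logliks[k], tol) > max_optima:
            break  # too many local maxima: the solution is no longer stable
        best_k = k
    return best_k


# Made-up log-likelihoods, for illustration only.
example_logliks = {
    2: [-1520.3, -1520.1, -1520.4, -1520.2],
    3: [-1411.0, -1410.8, -1411.2, -1410.9],
    4: [-1350.5, -1350.2, -1352.9, -1350.4],
    5: [-1340.1, -1335.7, -1347.3, -1329.8],
}
chosen_k = select_num_classes(example_logliks)
print("selected number of classes:", chosen_k)
```

With these made-up numbers, the restarts for two to four classes fall into at most two groups, while the five-class restarts scatter into four, so the sketch stops at four classes.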

Field experiments provide time series to train the HMM. This empirical approach avoids detailed modeling of vehicle/terrain interaction, which can add complexity and cause brittleness when modeling assumptions are violated. Using this approach, we have successfully classified four terrains (grass, mulch, dirt, and asphalt), plus an unknown class for novel terrain, with 90% accuracy, using data collected by a Packbot vehicle over a range of velocities from 0.5 to 2.0 m/s.
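To illustrate how an HMM exploits the temporal coherence of terrain classes, the sketch below decodes a short time series with the Viterbi algorithm. It covers decoding only, not the unsupervised training described above, and every specific is an assumption made for illustration: a single scalar feature per time step, two classes with Gaussian emissions, and a "sticky" transition model controlled by the hypothetical parameter `stay_prob`.

```python
import math


def gaussian_log_pdf(x, mean, std):
    """Log density of a 1-D Gaussian."""
    return (-0.5 * math.log(2.0 * math.pi * std * std)
            - (x - mean) ** 2 / (2.0 * std * std))


def viterbi(observations, means, stds, stay_prob=0.95):
    """Most likely state sequence for a sticky HMM with Gaussian emissions."""
    n_states = len(means)
    log_stay = math.log(stay_prob)
    log_switch = math.log((1.0 - stay_prob) / (n_states - 1))

    # Uniform prior over the initial state.
    score = [math.log(1.0 / n_states)
             + gaussian_log_pdf(observations[0], means[s], stds[s])
             for s in range(n_states)]
    back_pointers = []

    for x in observations[1:]:
        new_score, pointers = [], []
        for s in range(n_states):
            # Best predecessor of state s, including the transition cost.
            candidates = [score[p] + (log_stay if p == s else log_switch)
                          for p in range(n_states)]
            best_prev = max(range(n_states), key=lambda p: candidates[p])
            pointers.append(best_prev)
            new_score.append(candidates[best_prev]
                             + gaussian_log_pdf(x, means[s], stds[s]))
        score = new_score
        back_pointers.append(pointers)

    # Trace the best path backwards from the best final state.
    state = max(range(n_states), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back_pointers):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path


# Hypothetical vibration feature: class 0 centred at 1.0, class 1 at 3.0.
means, stds = [1.0, 3.0], [1.0, 1.0]
readings = [0.9, 1.2, 2.3, 0.8, 1.1, 3.1, 2.9, 1.8, 3.2, 3.0]

# Per-sample labelling (nearest mean) ignores time and flips on outliers.
naive_labels = [0 if abs(x - means[0]) <= abs(x - means[1]) else 1
                for x in readings]
smoothed_labels = viterbi(readings, means, stds)
print("per-sample:", naive_labels)
print("viterbi:   ", smoothed_labels)
```

In this toy series the per-sample labels flip at the two outlier readings (2.3 and 1.8), whereas the decoded path changes class only once, because each class switch carries a transition cost.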

Visual Classification

After a set of classes has been defined and the proprioceptive classifier has been trained as above, the terrain the vehicle traversed in the process is labeled by terrain type at a set of patches along the path. These patches are projected into images and local maps obtained during the traverse to collect features to train the near-field classifier, which uses both visual features from the images and 3-D features from the range data or maps. The image above illustrates the sequence from acquiring proprioceptive data, through HMM-based learning, to projecting patches into prior images to collect visual features for training the near-field classifier; collecting features from the local maps is analogous.
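Projecting a labeled patch into an earlier image can be sketched with a standard pinhole camera model. This is a simplified illustration under stated assumptions: the patch corners are taken to be already expressed in the camera frame of the earlier image (x right, y down, z forward, in metres), which in practice requires the vehicle pose at both times. The function name and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values, not the project's calibration.

```python
def project_patch(corners_cam, fx, fy, cx, cy, width, height):
    """Project 3-D patch corners (camera frame) to pixel coordinates.

    Returns a list of (u, v) pixels, or None if any corner lies behind the
    camera or outside the image, in which case the patch is skipped.
    """
    pixels = []
    for x, y, z in corners_cam:
        if z <= 0.0:
            return None  # corner is behind the camera
        u = fx * x / z + cx
        v = fy * y / z + cy
        if not (0.0 <= u < width and 0.0 <= v < height):
            return None  # corner falls outside the image
        pixels.append((u, v))
    return pixels


# A 0.5 m x 1.0 m ground patch, 1.0 m below the camera and 4 to 5 m ahead.
patch_corners = [(-0.25, 1.0, 4.0), (0.25, 1.0, 4.0),
                 (0.25, 1.0, 5.0), (-0.25, 1.0, 5.0)]
patch_pixels = project_patch(patch_corners, fx=500.0, fy=500.0,
                             cx=320.0, cy=240.0, width=640, height=480)
print(patch_pixels)
```

The pixels inside the projected quadrilateral would then supply the visual features, paired with the terrain label assigned by the proprioceptive classifier.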

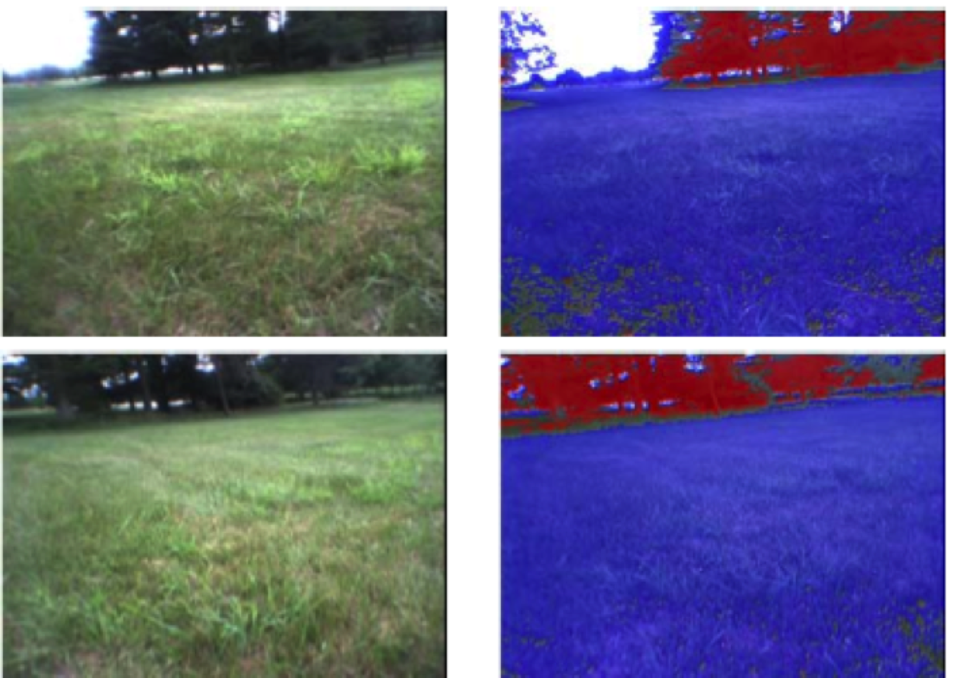

The visual classifier must run in real time. In prior work with the DARPA LAGR project, we developed a fast Support Vector Machine (SVM) classifier using various feature sets that ran at 35 Hz with 640x480 color imagery. The most successful feature set was bivariate normalized-color (e.g., the a* and b* channels from CIELAB) histograms, which contain 256 features, with accuracies ranging from 90% to 96% depending on field conditions. We found that patch-average normalized color plus a handful of Gabor textures performed approximately as well. The visual classifier begins operation with a default parameter set, but is then trained for existing visual conditions as the robot drives. In general, we have found that on-line adaptation in the visual classifier is beneficial in developing a robust system, and on-the-fly SVM training is a strength of our system. We have also developed a random fern-based classifier, which can provide increased speed at the cost of a marginal loss in classification accuracy.
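A bivariate color histogram feature of the kind described above can be sketched as follows. The text gives only the total of 256 features; the 16 x 16 binning, the value range of -128 to 128 for a* and b*, and the function name are assumptions made for illustration. The sketch also assumes the patch pixels have already been converted to CIELAB.

```python
def ab_histogram(ab_pixels, bins_per_axis=16, lo=-128.0, hi=128.0):
    """Normalised 2-D histogram of (a*, b*) pairs, flattened to a vector.

    With 16 bins per axis the result has 16 * 16 = 256 entries that sum to 1
    (or all zeros for an empty patch).
    """
    counts = [0.0] * (bins_per_axis * bins_per_axis)
    bin_width = (hi - lo) / bins_per_axis
    for a, b in ab_pixels:
        # Clamp to the valid bin range so out-of-range values land in edge bins.
        i = min(max(int((a - lo) / bin_width), 0), bins_per_axis - 1)
        j = min(max(int((b - lo) / bin_width), 0), bins_per_axis - 1)
        counts[i * bins_per_axis + j] += 1.0
    total = sum(counts)
    return [c / total for c in counts] if total else counts


# Hypothetical patch: three greenish pixels and one near-neutral pixel.
patch_ab = [(-20.0, 30.0)] * 3 + [(5.0, 5.0)]
features = ab_histogram(patch_ab)
print(len(features), "features,",
      sum(1 for f in features if f > 0), "non-empty bins")
```

Because the lightness channel is left out, the feature depends on chromaticity only, which is one plausible reading of "normalized color" here. The resulting vector is the kind of input a per-patch SVM would consume.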

Selected Publications

Rankin, A., Bajracharya, M., Huertas, A., Howard, A., Moghaddam, B., et al. (2010). “Stereo-vision-based perception capabilities developed during the Robotics Collaborative Technology Alliances program,” SPIE Conference Series, vol. 7692, pp. 76920C-76920C-15.

Bajracharya, Max, Howard, Andrew, Matthies, Larry, Tang, Benyang, and Turmon, Michael (2009). “Autonomous off-road navigation with end-to-end learning for the LAGR program,” Journal of Field Robotics, 26(1), pp. 3–25, Wiley. (CL#08-3542)

Bajracharya, Max, Tang, Benyang, Howard, Andrew, Turmon, Michael, and Matthies, Larry (2008). “Learning long-range terrain classification for autonomous navigation,” 2008 IEEE International Conference on Robotics and Automation, pp. 4018–4024. (CL#08-0301, NTR 45146)

Howard, A., Turmon, M., Matthies, L., Tang, B., Angelova, A., and Mjolsness, E. (2006). “Towards learned traversability for robot navigation: From underfoot to the far field,” Journal of Field Robotics, 23(11/12), pp. 1005–1017. (CL#06-3711)